Other products

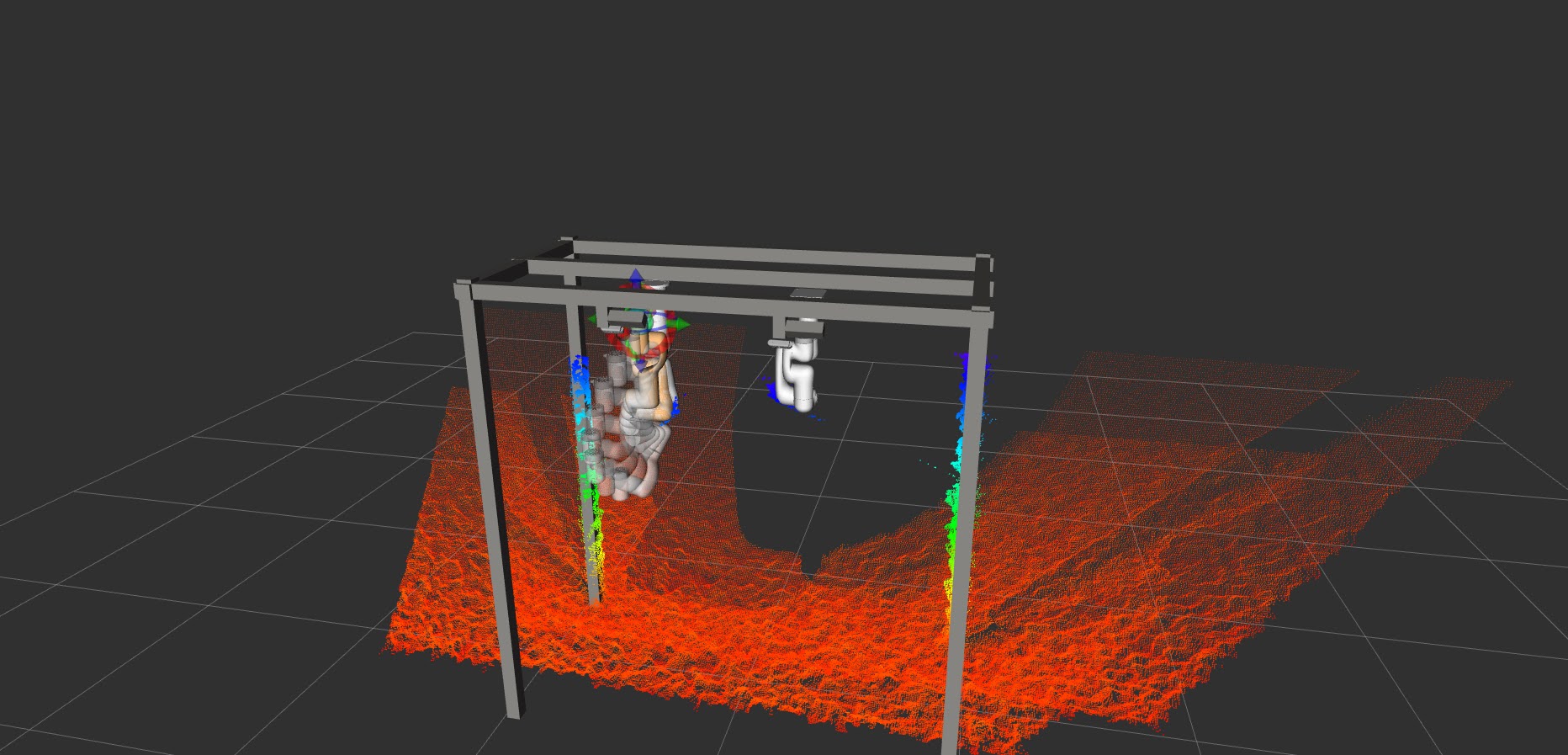

ROBOTCORE Collaborative

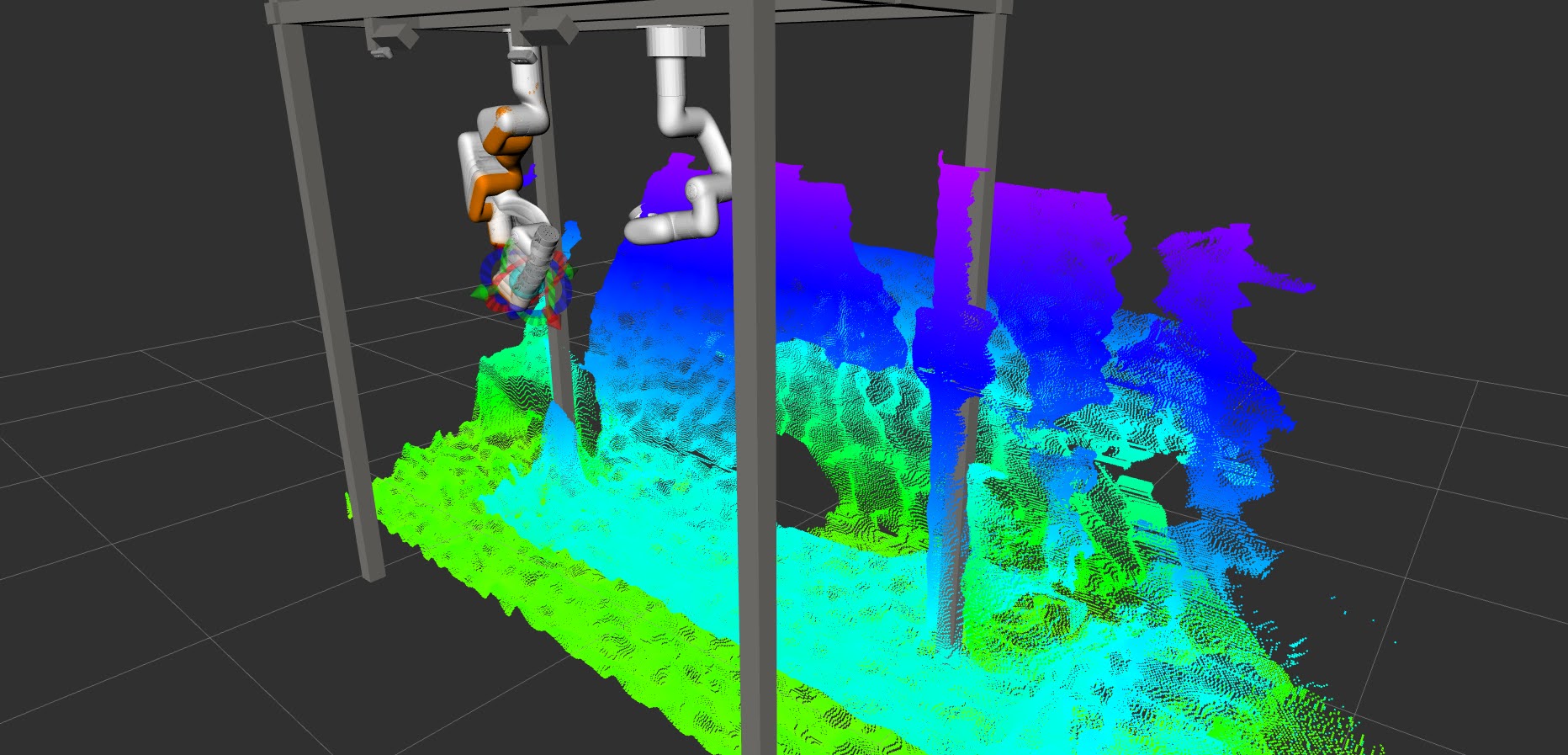

Dynamic collision avoidance:

Advanced Human-Robot Collaboration with Accelerated Adaptive Evasion

ROBOTCORE® Collaborative is a hardware-accelerated control approach centered on Human-Robot Interaction (HRI) that delivers dynamic collision avoidance for safe, seamless collaboration between robots and humans. By combining robot perception with FPGA technology, it provides the determinism and rapid response times needed to prevent collisions and aggressive behaviors in shared workspaces. The solution is fully API-compatible with the ROS 2 control stack, so it integrates easily into existing robotic systems while enhancing their collaborative capabilities.

Get ROBOTCORE® Collaborative Benchmarks