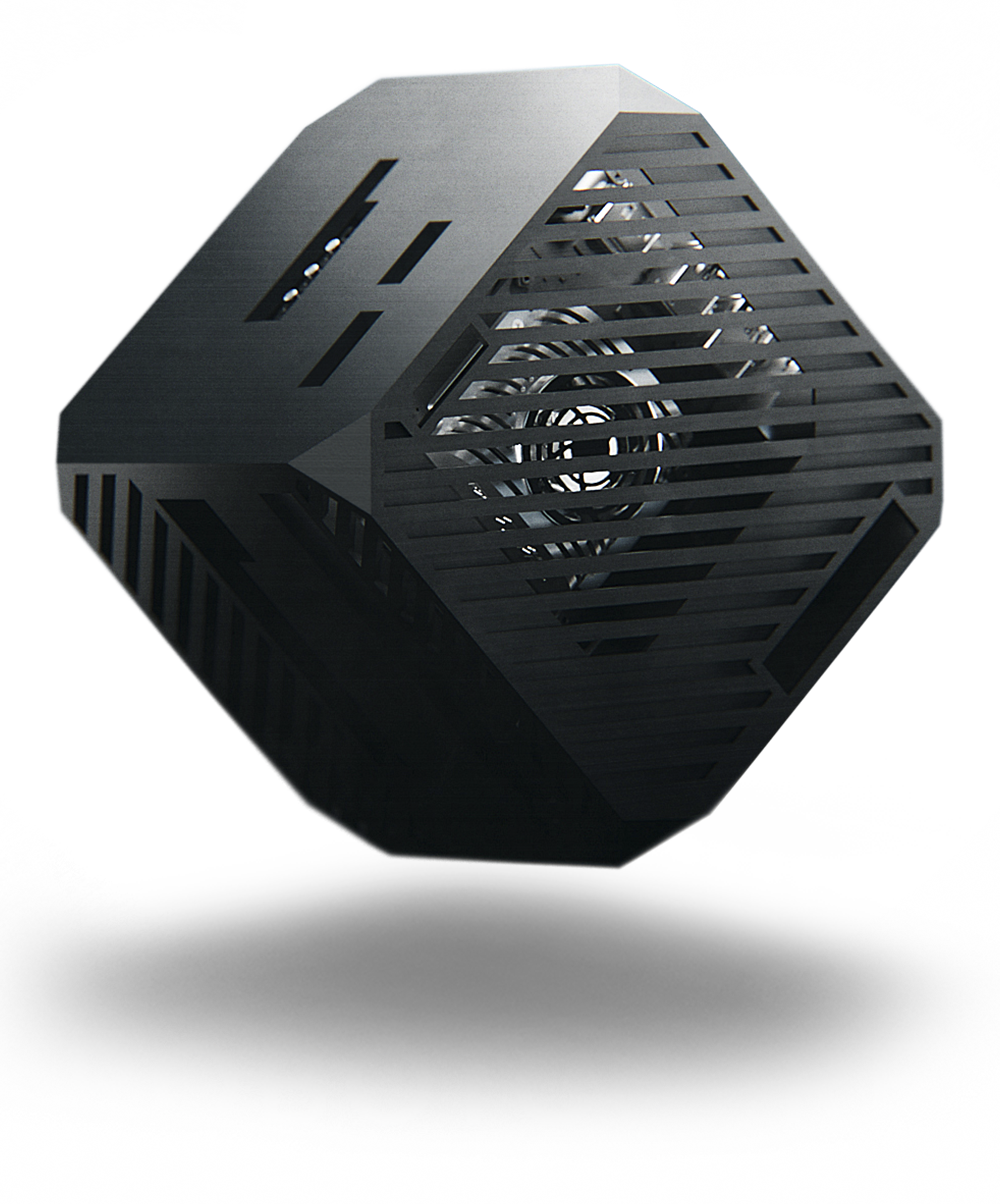

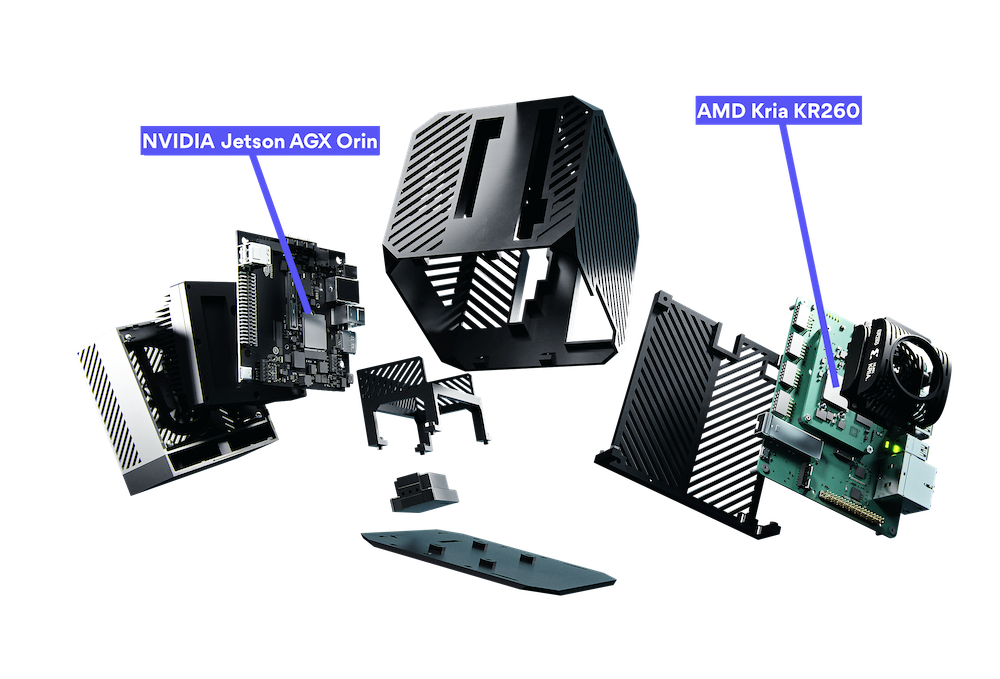

ROBOTCORE® RPU

The Robotic Processing Unit specialized in ROS computations

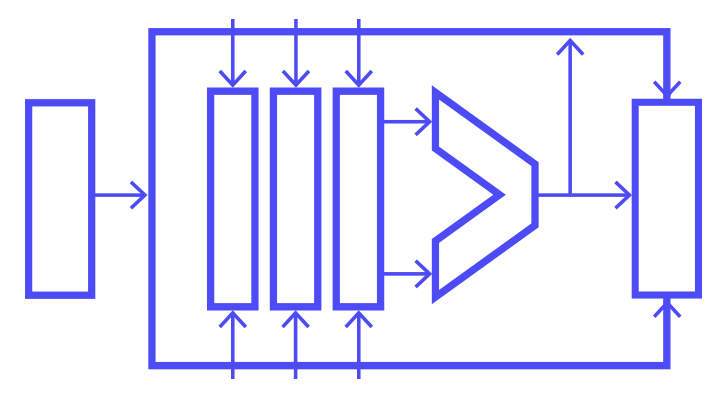

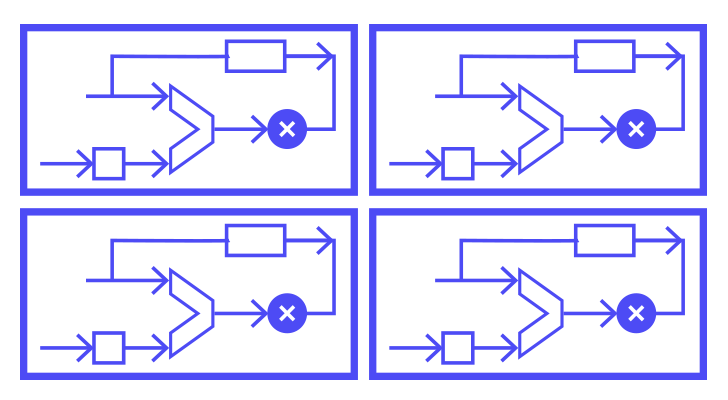

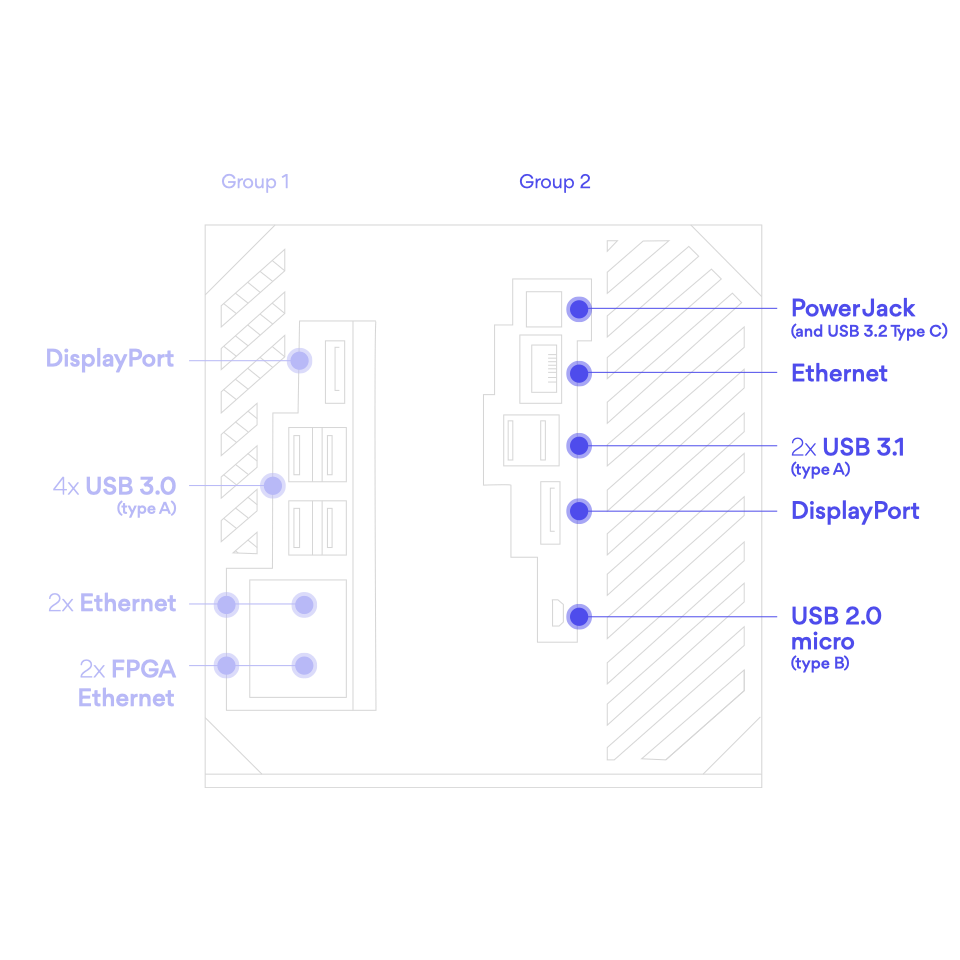

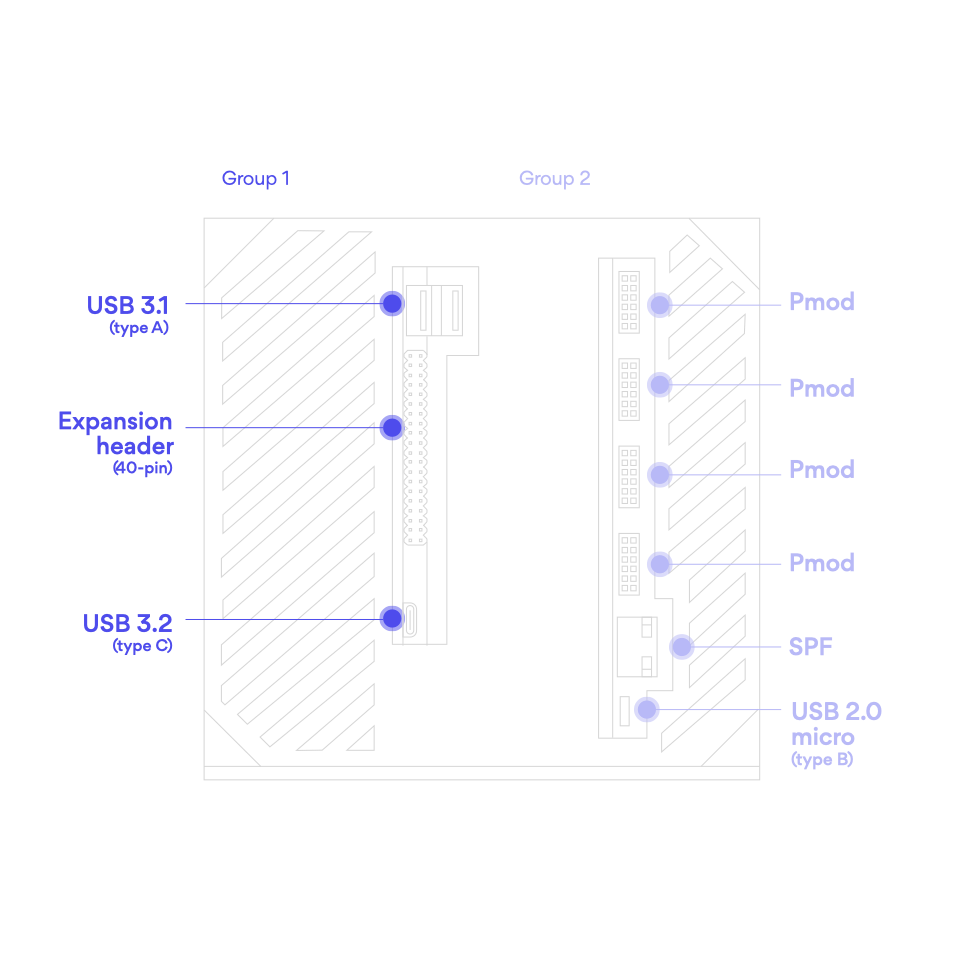

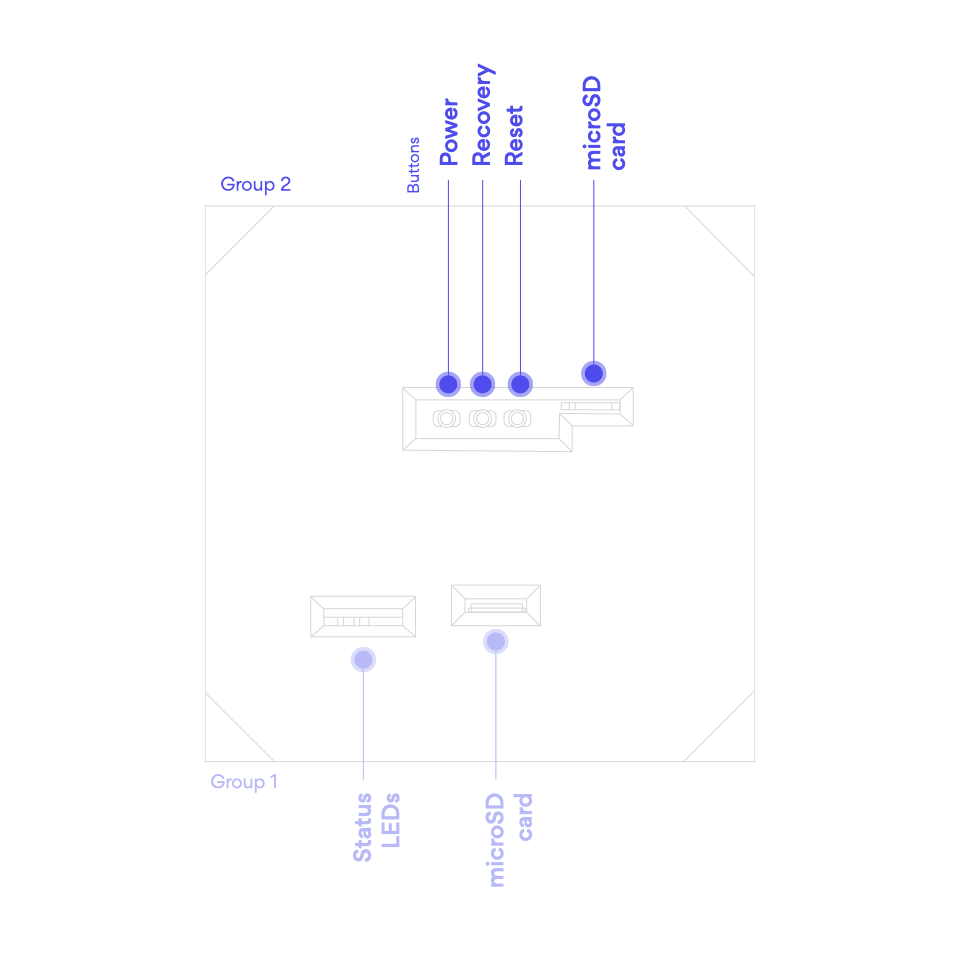

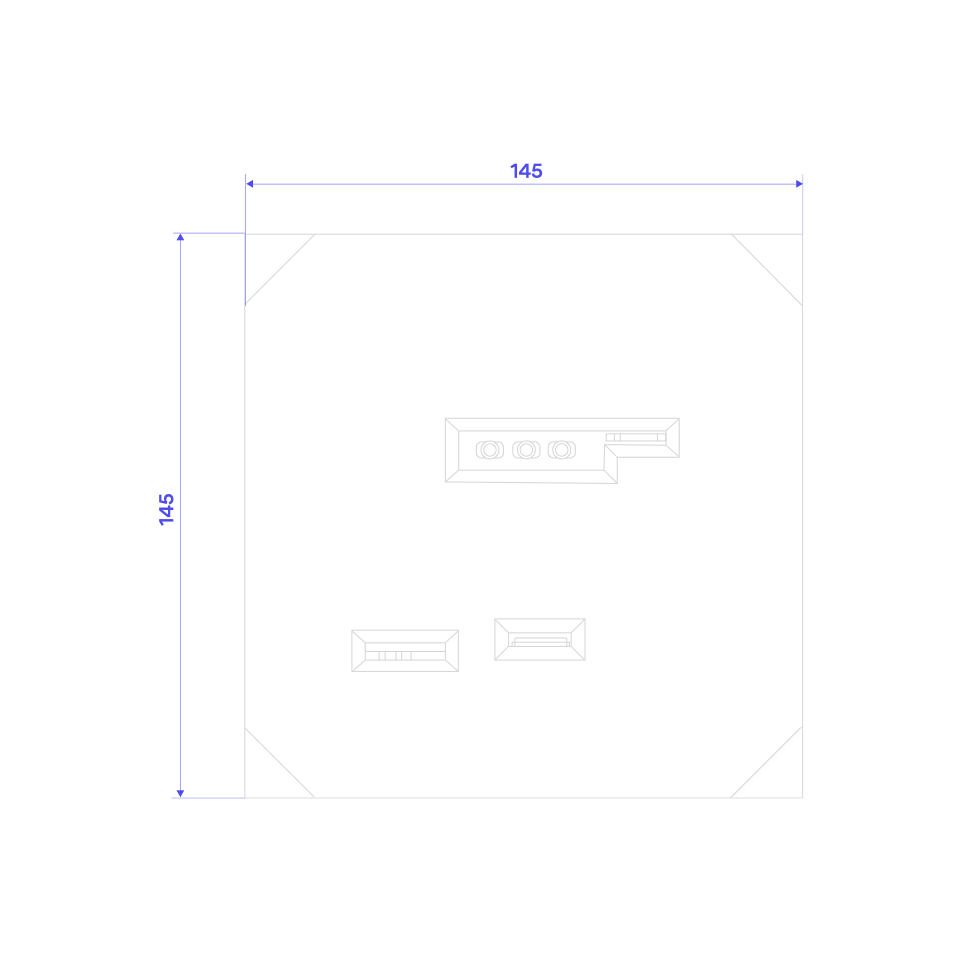

ROBOTCORE® is a robot-specific processing unit (RPU) that helps map Robot Operating System (ROS) computational graphs efficiently onto its CPUs, GPU, and FPGA to obtain the best performance. It enables robots to react faster, consume less power, and deliver additional real-time capabilities.
